The NVIDIA Blog | |

| Exascale: An Innovator’s Dilemma

Posted: 15 Nov 2011 03:07 PM PST

NVIDIA CEO Jen-Hsun Huang gave an electrifying opening keynote today at SC11, the annual gathering of the international supercomputing community, as it gears up for the industry's next grand challenge: exascale computing.

He began his presentation by discussing Clayton Christensen, the Harvard Business School professor who literally wrote the book on innovation, The Innovator's Dilemma. Christensen gave the keynote at last year's event, SC10, where he shared the basic principles of his theory, which at its simplest states that disruptive technologies are not initially deemed valuable to the markets they eventually serve.

Disruptive technologies are typically cheaper, less advanced and spring from low-margin markets. By their nature, they fly in the face of the forces that govern large, established companies. And yet history is on their side. The steady progression of the computing industry – from mainframes to mini computers to workstations to PCs to today's mobile devices – can be read as successive waves of disruptive innovation.

Huang described how Christensen's model applies to the supercomputing industry and the processors at its core.

The limitations of power

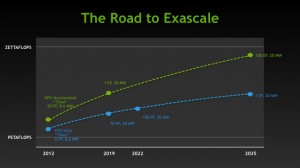

For much of the past two decades, computer scientists were able to steadily improve supercomputer performance by scaling out ever-larger CPU-powered systems. However, Huang argued that this approach has hit a wall. Because of the energy required to power them, it's no longer practical to scale out CPU-only systems. And in the context of the exascale challenge, power is paramount. The goal is 1 exaflop at 20 megawatts by 2019; a CPU-only system would not get there until 2035, more than 15 years late. "Energy efficiency is our single greatest imperative," said Huang.

Enter the GPU. While CPUs are composed of a handful of cores optimized for linear tasks, GPUs are composed of many cores optimized for parallel computing and are dramatically more energy efficient. However, the CPU's linear skills are vital to today's mainstream supercomputing applications, and GPUs perform these functions poorly. GPUs underperform on those applications today, but hold the promise of solving supercomputing's most fundamental issue. These conditions have all the makings of the innovator's dilemma…

GPU: A disruptive innovation, again and again

Throughout its evolution, the GPU has followed Christensen's model. It began life as the engine of computer graphics for PC games in 1998. This was at a time when workstations represented the mainstream for computer graphics, and PC gaming was a fringe market. The stuff of teenagers. And something that could be safely ignored by the more serious business the workstation market represented.

However, it turned out that there were a lot of teenagers who liked to play games. Then along came Quake, a game that had mass appeal. PC gaming took off. The market has continued to grow ever since, and along with it, the R&D budget for the GPU. In fact, the GPU became so good at computer graphics that by 2010, just 12 years after its invention, it was the de facto standard for the workstation market that once ignored it.

A happy accident occurred along the way.
Computer graphics requires the ability to perform a type of calculation known as a floating-point operation, or FLOP. As it turns out, FLOPs are the lingua franca of researchers and scientists who use supercomputers. So, today, the GPU stands to expand into an altogether new market. Coupled together, the CPU and GPU can redefine the supercomputing industry and pave the way to exascale.

The good news: the core markets the GPU serves – professional graphics, gaming, and mobile devices – are enormous and growing. In another of Christensen's terms, the GPU is a sustainable innovation.

In the field of supercomputing, GPUs have come a long way fast. First deployed in this capacity just a few years ago, today they power three of the world's top five supercomputers. And in the latest Top500 list of supercomputers, published Monday, the number of GPU-powered systems more than tripled in just a year, to 35.

To make GPU computing more accessible to more people, Huang highlighted OpenACC, a new parallel-programming standard backed by NVIDIA, Cray Inc., the Portland Group (PGI), and CAPS.

Exascale: The journey is the reward

Huang closed his presentation by coming full circle. In a series of examples, he demonstrated the dramatic impact exascale processors will have on the rest of the industry, from mobile devices to gaming consoles to workstations.

If we reach exascale in 2019, five-watt mobile devices will be able to reach a few teraflops, equivalent to the world's fastest supercomputer in 1997. Under the same conditions, a 100-watt gaming console will be able to achieve "tens" of teraflops, equivalent to the top supercomputer in 2004. And a 1,000-watt workstation would hit "hundreds" of teraflops, equal to the BlueGene at Lawrence Livermore National Laboratory just five years ago, when it was the fastest supercomputer in the world. |

